Is Sitemap Important for SEO? Here’s What You Must Know

If your website were a city, a sitemap would be its subway map. Without it, search engine crawlers would be stuck wandering alleys and guessing which pages lead where. Sounds inefficient, right?

Still think a sitemap is just a “nice-to-have”? That’s a myth worth busting. While not every site needs one, for many, it’s the secret ingredient that helps Google find, crawl, and index the right content faster and more accurately.

So, why are XML sitemaps important? And when does skipping one actually hurt your SEO efforts?

Keep reading. The answer might surprise you and save your site’s SEO from getting lost in the shuffle.

What is a Sitemap?

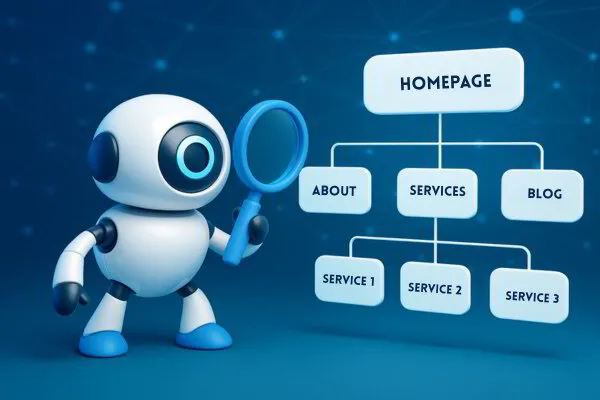

Think of a sitemap as the blueprint of your website. Just like an architect outlines every room in a building, a sitemap maps out all the important pages and files that make up your site. This file helps search engines understand your site’s structure so they can crawl and index your content with more precision.

Technically speaking, a sitemap is a file that lists your site’s key URLs. It may also include extra details like when a page was last updated, how often it changes, and whether there are alternate versions available in different languages. This kind of information helps search engine crawlers, like those from Google or Bing, decide what to crawl and when to come back.
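To make that concrete, here is a minimal sketch in Python that builds a basic XML sitemap with the standard library’s `xml.etree.ElementTree`. Under the sitemaps.org protocol, `<urlset>`, `<url>`, and `<loc>` are required, while `<lastmod>`, `<changefreq>`, and `<priority>` are optional. The helper name `build_urlset` and the example.com pages are hypothetical placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_urlset(pages):
    """Build a <urlset> element from a list of page dicts.

    Each dict needs a "loc" key; "lastmod" and "changefreq" are optional.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]
        # Optional metadata is only written when the page provides it.
        for tag in ("lastmod", "changefreq"):
            if tag in page:
                ET.SubElement(url, tag).text = page[tag]
    return urlset

# Hypothetical pages on a placeholder domain.
pages = [
    {"loc": "https://www.example.com/", "lastmod": "2024-05-01", "changefreq": "weekly"},
    {"loc": "https://www.example.com/services/seo/", "lastmod": "2024-04-18"},
]

# UTF-8 output with an XML declaration, as the protocol expects.
xml_bytes = ET.tostring(build_urlset(pages), encoding="utf-8", xml_declaration=True)
print(xml_bytes.decode("utf-8"))
```

Writing the result to a file named sitemap.xml at your site root is one common setup; most CMS plugins do the equivalent for you.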

Sitemaps generally come in two main formats. First is the XML sitemap, which speaks directly to search engines. It serves up important data in XML format that helps bots crawl and index your site efficiently.

If you run a large eCommerce store or news site that publishes fresh content regularly, an XML sitemap can be a game-changer. It ensures search engine crawlers find everything from product listings to recent blog posts without missing a beat.

The second type is the HTML sitemap, which is built with humans in mind. This version creates a simple, clickable list of your site’s pages, allowing visitors to find what they need quickly without getting lost in dropdown menus or endless scrolling. For example, a service-based business might use an HTML sitemap to highlight category pages like “SEO Services,” “PPC Management,” or “Local Marketing.”

Although not every website is required to have a sitemap, skipping one could limit your visibility, especially if your site is new, has complex navigation, or includes rich media content like videos and news articles. It’s a straightforward step that adds SEO value and improves both user and crawler experience.

So while it might seem like a technical detail, a sitemap quietly plays a major role in how well your content gets found, indexed, and shown to the right audience.

How Do Search Engines Use Sitemaps?

Search engines depend on internal links to navigate between pages as they crawl a website. But not all pages are easy to find this way. Some may be buried deep within the site structure or simply lack proper linking. This is where an XML sitemap becomes a practical tool.

Search engines don’t just stumble onto your pages by chance. When you use tools like Google Search Console or Bing Webmaster Tools to submit a sitemap, you’re giving them a clear path to follow. This file lists key URLs across your site, including newer or hard-to-find pages that may not be well connected through internal links.

Along with the URLs, search engines may also read the metadata, such as when a page was last updated or how frequently it changes, as hints for deciding what to crawl first.

Sitemaps are especially useful for large websites, e-commerce platforms, or content-heavy blogs where certain pages may be isolated or underlinked. They can also help new pages get discovered sooner, so they’re crawled and indexed with less delay.

For websites using video sitemap or image sitemap files, additional details such as video duration or image location offer even more clarity to search robots.
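As an illustration of that extra detail, the sketch below adds Google’s image sitemap extension to a single `<url>` entry. It assumes the image extension namespace `http://www.google.com/schemas/sitemap-image/1.1`; the function name `url_with_images` and the product URLs are hypothetical. Video entries follow a similar pattern with their own namespace and tags, which aren’t shown here.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"
# Serialize the core namespace as the default and the image one as "image:".
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)

def url_with_images(urlset, page_url, image_urls):
    """Append a <url> entry that lists the images found on that page."""
    url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
    ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
    for src in image_urls:
        image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
        ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = src
    return url

urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
url_with_images(
    urlset,
    "https://www.example.com/products/oak-table/",
    ["https://www.example.com/img/oak-table-front.jpg",
     "https://www.example.com/img/oak-table-side.jpg"],
)
print(ET.tostring(urlset, encoding="unicode"))
```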

While a sitemap doesn’t guarantee every URL will appear in search results, it does improve the chances of getting more of your website properly indexed. It acts as a companion to your internal link structure, not a replacement.

When used correctly, it supports the crawl and index process while reinforcing which pages offer the most SEO value.

Why Are Sitemaps Important for SEO?

Direct and Indirect SEO Benefits of Sitemaps

When you publish a new page, it doesn’t always show up in search results right away. If that page isn’t linked from anywhere obvious, search engines might miss it entirely. A sitemap helps close that gap. It gives search engine crawlers a clear list of your most important URLs so nothing gets left behind.

For larger sites—or even smaller ones with deep navigation—this matters a lot. An XML sitemap improves coverage by helping bots discover pages that might be buried in category folders, archives, or blog sections that don’t get much internal linking.

There’s also the matter of crawl efficiency. Google and Bing don’t crawl every site endlessly. Each site gets a crawl budget, which matters most for large or frequently updated sites, and your sitemap helps point crawlers to what actually matters. A sitemap can’t stop bots from visiting duplicate content or low-priority pages, but it steers their attention toward your core content: your services, product pages, or newly published posts.

Beyond that, sitemaps add structure. A sitemap tells search engines which URLs you consider worth indexing, and splitting it into separate files by section can mirror your site hierarchy. Internal links still do most of the work of showing how pages connect, but together these signals can lead to more accurate indexing over time.

HTML sitemaps have their own role. These are built for people, not bots. They help visitors find content without digging through menus or relying on search bars. A cleaner user experience often means more time on site and fewer frustrated exits, which supports your broader SEO goals.

In the end, sitemaps work behind the scenes to boost your SEO from multiple angles. They improve crawlability, guide search engine attention, and help both users and bots find what they need without friction.

Boosting Indexability and Visibility in Search Results

Search engines don’t guess which pages to rank—they crawl what they can find. If your content is tucked away in a deep subfolder or doesn’t have many internal links pointing to it, there’s a good chance it’ll be missed. That’s where sitemaps become a powerful asset, especially when speed and visibility matter.

An XML sitemap acts like a curated list of your site’s key pages—products, articles, landing pages—delivered directly to search engine crawlers. Even if those pages are several layers deep or recently added, they don’t have to wait in line. By submitting a sitemap to Google Search Console or Bing Webmaster Tools, you’re giving crawlers a shortcut to that content, bypassing the usual delays of traditional discovery through links.

Large websites benefit the most from this. Imagine a retailer like Wayfair adding hundreds of new product listings in a single day. Without a sitemap, search engines would have to stumble upon those pages through links, which can take a long time for deep catalog pages. With one, the new URLs are in front of crawlers as soon as the sitemap is read, so discovery and indexing can happen much sooner.

The same holds true for fast-paced news platforms like CNN or The New York Times. Their news sitemaps help breaking stories get discovered quickly, which is essential for staying visible on Google News and in trending search results.
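News sitemaps also have their own rules. Google’s news sitemap guidelines ask publishers to include only articles published in the last two days and to keep each news sitemap to 1,000 URLs. A minimal Python sketch of that selection step might look like this, assuming a hypothetical list of `(url, published_at)` pairs; generating the `<news:news>` markup itself is left out.

```python
from datetime import datetime, timedelta, timezone

NEWS_WINDOW = timedelta(days=2)  # only articles from the last two days
MAX_NEWS_URLS = 1000             # per news sitemap

def news_sitemap_candidates(articles, now):
    """Pick the articles that belong in a news sitemap, newest first.

    articles is a list of (url, published_at) pairs with timezone-aware datetimes.
    """
    recent = [(url, ts) for url, ts in articles if now - ts <= NEWS_WINDOW]
    recent.sort(key=lambda item: item[1], reverse=True)
    return recent[:MAX_NEWS_URLS]

# Hypothetical articles on a placeholder news domain.
now = datetime(2024, 5, 3, 12, 0, tzinfo=timezone.utc)
articles = [
    ("https://news.example.com/a/budget-vote", now - timedelta(days=1)),
    ("https://news.example.com/a/storm-update", now - timedelta(hours=3)),
    ("https://news.example.com/a/last-week", now - timedelta(days=7)),
]
for url, published in news_sitemap_candidates(articles, now):
    print(url, published.isoformat())
```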

Even if your site isn’t publishing at the scale of Amazon or the BBC, the concept still applies. A blog post buried in a rarely visited tag archive, or a new service page without any backlinks, can gain visibility faster when included in a properly structured sitemap.

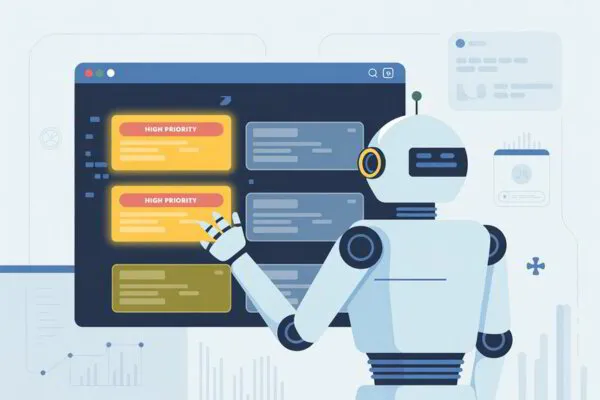

Prioritizing Pages for Crawling

Search engines have limited time and resources to spend on your site. So the question is: how do you quietly let them know which pages deserve their attention first? That’s where the <priority> tag in your XML sitemap steps in.

This tag allows a website owner to assign a numerical value to each URL—from 0.0 to 1.0—indicating its relative importance. A score of 1.0 tells search engine crawlers, “This page matters most,” while lower scores suggest the content is less critical. For example, a homepage or a frequently updated service page might carry a value of 1.0, whereas an outdated policy page could be closer to 0.3.

Treat these values as hints at best. Google has stated that it ignores <priority>, and under the sitemaps.org protocol the value is only relative to other URLs on your own site; it doesn’t affect how your pages compare with other sites. So the goal isn’t to assign high priority across the board; if you use the tag at all, reserve higher values for business-critical content.

Good sitemap hygiene involves being selective.

Not every page needs to compete for top priority. Use higher values for pages that change often, generate traffic, or support your SEO efforts directly, such as blog post hubs, category pages, or key landing pages. Meanwhile, static content, legal disclaimers, or seasonal promotions that rarely update can safely hold lower priority.
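If you do set priorities, a small lookup table keeps them consistent and inside the allowed 0.0 to 1.0 range. This sketch assumes hypothetical page types and values, and the 0.5 fallback matches the sitemaps.org default for a URL without a `<priority>` tag. Because search engines treat the tag as a hint at most, consistency matters more than precision here.

```python
DEFAULT_PRIORITY = 0.5  # sitemaps.org default when <priority> is omitted

# Hypothetical page types and values; adjust to your own site.
PRIORITY_BY_TYPE = {
    "home": 1.0,
    "service": 0.8,
    "category": 0.7,
    "blog_post": 0.6,
    "legal": 0.3,
}

def priority_for(page_type):
    """Return a <priority> string for a page type, validated to 0.0-1.0."""
    value = PRIORITY_BY_TYPE.get(page_type, DEFAULT_PRIORITY)
    if not 0.0 <= value <= 1.0:
        raise ValueError(f"priority {value} is outside 0.0-1.0")
    return f"{value:.1f}"

print(priority_for("home"), priority_for("legal"), priority_for("landing"))
```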

The <priority> tag is just one element in a sitemap file, and its effect on visibility is small at best. A clear internal linking structure and properly linked content do far more to support good crawl decisions across all your pages.

Providing Temporal Information (lastmod, changefreq Tags)

Not all content stays relevant forever. Some pages evolve with time, while others remain untouched for years. To help search engine crawlers make smarter decisions, your sitemap can include two time-related tags: <lastmod> and <changefreq>.

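The <lastmod> tag acts like a timestamp for your content. It records when a page’s content was last meaningfully updated, in W3C Datetime format (for example, 2024-05-03, optionally with a time). An accurate value gives crawlers a reason to revisit and potentially re-index that page. Whether you’re editing a product description or updating a blog post with new stats, this tag helps make sure your changes aren’t overlooked, as long as you only change it when the content actually changes.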
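One way to keep `<lastmod>` honest is to move it forward only when the page’s main content changes, not on every template tweak or redeploy. The sketch below assumes you can extract each page’s main content as text; the helper names `content_fingerprint` and `next_lastmod` are hypothetical. It writes the value in W3C Datetime format with an explicit UTC offset.

```python
import hashlib
from datetime import datetime, timezone

def content_fingerprint(main_content: str) -> str:
    """Hash the page's main content so template tweaks don't count as updates."""
    return hashlib.sha256(main_content.encode("utf-8")).hexdigest()

def next_lastmod(previous_hash, previous_lastmod, main_content, now):
    """Return (hash, lastmod), moving lastmod forward only if the content changed.

    lastmod uses W3C Datetime format, e.g. 2024-05-03T12:00:00+00:00.
    """
    new_hash = content_fingerprint(main_content)
    if new_hash == previous_hash:
        return previous_hash, previous_lastmod
    return new_hash, now.isoformat(timespec="seconds")

# Hypothetical history for one product page.
first = datetime(2024, 5, 3, 12, 0, tzinfo=timezone.utc)
h1, lm1 = next_lastmod(None, None, "Original product description", first)
h2, lm2 = next_lastmod(h1, lm1, "Original product description", first.replace(day=10))
print(lm1, lm2)  # same date twice: the content did not change
```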

Then there’s <changefreq>, which offers a general idea of how often a page is expected to change. You’re not setting a timer; you’re giving search engines a nudge, and a weak one, since Google has said it ignores <changefreq>. Pages that rarely change, like terms and conditions, can be marked “yearly” or “never.”

More dynamic pages, like news articles or product listings, might be set to “daily” or “weekly” depending on how often they’re refreshed.
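The protocol only accepts a fixed set of `<changefreq>` values, so a made-up value like “fortnightly” is simply invalid. A small guard like this hypothetical `checked_changefreq` helper catches that before the sitemap is published:

```python
# The only <changefreq> values the sitemaps.org protocol allows.
VALID_CHANGEFREQ = {"always", "hourly", "daily", "weekly", "monthly", "yearly", "never"}

def checked_changefreq(value: str) -> str:
    """Normalize a changefreq value and reject anything outside the protocol."""
    normalized = value.strip().lower()
    if normalized not in VALID_CHANGEFREQ:
        raise ValueError(f"invalid changefreq: {value!r}")
    return normalized

print(checked_changefreq("Weekly"))  # weekly
```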

Used accurately, these tags help search engines crawl and index your website’s content more efficiently. Instead of treating all your pages the same, crawlers can allocate attention based on real activity. That means new pages and recently edited ones are more likely to be discovered sooner, which can improve your visibility in search engine results pages.

For site owners managing lots of content, especially news or ecommerce platforms, accurate lastmod dates (plus sensible changefreq values for search engines that still read them) make it easier for crawlers to find timely updates without wasting crawl budget.

Reducing Crawl Budget Waste and Improving Crawl Allocation

Every website gets a limited slice of a search engine’s attention. The larger your site, the more important it becomes to make that slice count. This limited attention is known as your crawl budget: the number of URLs a search engine like Google can and wants to crawl on your site during a given period.

For large websites, especially those that publish regularly or manage big product catalogs, crawl budget plays a direct role in how quickly new content reaches search engine results pages. Smaller sites rarely run into crawl budget limits.

If crawlers spend time revisiting unchanged or low-priority pages, important updates may sit undiscovered, hurting both visibility and performance.

A well-structured XML sitemap helps avoid that waste. By clearly listing your website’s most valuable pages—like recently added blog posts, updated service pages, or high-traffic category sections—you help search engine crawlers focus their efforts where it matters.

Rather than spending as much time on static terms-and-conditions pages or outdated listings, bots are pointed toward content that matters more for your search engine optimization goals.

This targeted crawling approach not only improves how search engines crawl and index your site but also ensures your most critical updates are seen faster. Over time, that means fresher content showing up in search results and fewer missed opportunities due to crawl inefficiencies.

For website owners managing growing libraries of content, using your sitemap to highlight priority pages isn’t just good practice—it’s a smart strategy to improve your site’s SEO value without exhausting your crawl budget.

Mitigating Duplicate Content and Orphan Page Issues

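Duplicate content can quietly harm your SEO without ever setting off alarms. It often shows up when similar or identical pages are accessible through different URLs. Listing only the preferred version of each page in your XML sitemap signals to search engines which URL you consider canonical. It’s a weaker hint than a rel="canonical" tag on the page itself, so the two work best together.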

When a sitemap highlights canonical URLs, it reduces the risk of splitting authority across duplicate pages.
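In practice, that means listing the canonical target rather than every variant. This sketch assumes you already have each crawled URL paired with its rel="canonical" target (a hypothetical input format, for example from a site crawl); URLs without a canonical tag are treated as self-canonical.

```python
def canonical_sitemap_urls(pages):
    """Keep one entry per canonical URL, in first-seen order.

    pages is a list of (url, canonical_url) pairs; canonical_url may be None.
    """
    seen = set()
    result = []
    for url, canonical in pages:
        target = canonical or url  # no canonical tag: treat the URL as self-canonical
        if target not in seen:
            seen.add(target)
            result.append(target)
    return result

# Hypothetical crawl data: parameter variants all point at /shoes/.
pages = [
    ("https://www.example.com/shoes/", "https://www.example.com/shoes/"),
    ("https://www.example.com/shoes/?sort=price", "https://www.example.com/shoes/"),
    ("https://www.example.com/shoes/?utm_source=mail", "https://www.example.com/shoes/"),
    ("https://www.example.com/boots/", None),
]
print(canonical_sitemap_urls(pages))
```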

Then there are orphan pages—the content that exists on your site but isn’t linked anywhere else.

These pages don’t show up in navigation menus, and internal links don’t point to them. Without any paths leading in, search engines may never crawl them. Adding them to your sitemap changes that. It gives search engine crawlers a direct route to those forgotten pages, improving your site’s overall coverage.
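A sitemap gets orphan pages crawled, but it’s also worth knowing which ones they are so you can link to them. Assuming a hypothetical crawl export that maps each page to the internal URLs it links to, a set difference finds sitemap URLs that no other page links to:

```python
def find_orphans(sitemap_urls, internal_links):
    """Return sitemap URLs that no other page links to.

    internal_links maps each crawled page URL to the set of URLs it links to.
    """
    linked = set()
    for source, targets in internal_links.items():
        linked.update(t for t in targets if t != source)  # ignore self-links
    return sorted(set(sitemap_urls) - linked)

# Hypothetical crawl data for a three-page site.
sitemap_urls = [
    "https://www.example.com/",
    "https://www.example.com/services/",
    "https://www.example.com/spring-landing/",
]
internal_links = {
    "https://www.example.com/": {"https://www.example.com/services/", "https://www.example.com/"},
    "https://www.example.com/services/": {"https://www.example.com/"},
}
print(find_orphans(sitemap_urls, internal_links))
```

The homepage counts as linked here because the services page points back to it; the spring landing page is the one that needs an internal link.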

A clean sitemap also helps streamline how search engines crawl and index your site. Instead of wasting time on outdated content or duplicate entries, crawlers can focus on pages with actual SEO value—your product listings, service pages, or fresh blog content.

If your site includes image sitemap entries, alternate language versions, or deep archive content, don’t leave them out. When everything is properly listed and structured, your site becomes easier to explore for both web crawlers and human visitors.

Best Practices for Creating and Maintaining Sitemaps

Structuring XML Sitemaps for Clarity and Effectiveness

When a website starts to grow, so does the challenge of helping search engines find and understand all your pages. One of the simplest ways to stay organized—and visible—is to structure your XML sitemap with purpose, not just automation.

For large websites, grouping similar content into separate sitemap files makes a real difference.

Creating a post sitemap for your blog articles, a dedicated sitemap for product pages, and another for media files helps search engine crawlers move through your content more efficiently. This kind of segmentation makes your sitemap easier to manage and faster to update, especially when paired with a sitemap index file that ties everything together.
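A sitemap index is itself a small XML file that lists the location of each child sitemap. This sketch builds one with Python’s ElementTree; the per-section file names (post-sitemap.xml and so on) and the helper `build_sitemap_index` are hypothetical, and each entry’s `<lastmod>` is optional.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap_index(child_sitemaps):
    """Build a <sitemapindex> that points to per-section sitemap files.

    child_sitemaps is a list of (url, lastmod) pairs; lastmod may be None.
    """
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc, lastmod in child_sitemaps:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = loc
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    return index

index = build_sitemap_index([
    ("https://www.example.com/post-sitemap.xml", "2024-05-03"),
    ("https://www.example.com/product-sitemap.xml", "2024-05-02"),
    ("https://www.example.com/media-sitemap.xml", None),
])
print(ET.tostring(index, encoding="unicode"))
```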

Clarity is key. Every URL listed should be a canonical URL: no duplicates, no redirects. The sitemaps.org protocol requires the file to be UTF-8 encoded, and characters such as & in URLs must be escaped (as &amp;), or the file won’t parse. When search engines crawl your sitemap, they need clean, accurate paths to follow, not broken links or outdated URLs.
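Some of these rules are easy to check before publishing. The sketch below is a hypothetical pre-flight check for a single URL: it flags relative URLs, URLs on a different scheme or host than the site (the sitemaps.org protocol expects every URL to share the sitemap’s protocol and host), and #fragments. Redirect and canonical checks need a live crawl and aren’t covered.

```python
from urllib.parse import urlsplit

def sitemap_url_problems(url, site_root):
    """Return a list of hygiene problems for one URL (empty list means OK).

    site_root is the scheme and host the sitemap lives on, e.g.
    "https://www.example.com". Redirects and canonicals are not checked here.
    """
    site = urlsplit(site_root)
    parts = urlsplit(url)
    problems = []
    if not parts.scheme or not parts.netloc:
        problems.append("not an absolute URL")
    elif (parts.scheme, parts.hostname) != (site.scheme, site.hostname):
        problems.append(f"not on {site.scheme}://{site.hostname}")
    if parts.fragment:
        problems.append("contains a #fragment")
    return problems

for candidate in [
    "https://www.example.com/blog/",
    "/blog/relative-path/",
    "https://partner.example.org/page",
    "https://www.example.com/faq#shipping",
]:
    print(candidate, sitemap_url_problems(candidate, "https://www.example.com"))
```

If you generate the XML with a library such as Python’s xml.etree.ElementTree, characters like & in query strings are escaped to &amp; automatically.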

Your sitemap should reflect the internal logic of your website. Listing the same key pages your main navigation points to helps reinforce which pages are important. Leave external URLs out, though: the protocol expects every URL in a sitemap to be on the same host as the sitemap file, so references to partner pages or outside sources belong in your content, not your sitemap.

It’s also worth remembering that XML sitemaps don’t guarantee indexing. What they do is improve the odds. They guide search engines to pages that might otherwise go unnoticed, like orphan pages with no inbound links or recently added content that hasn’t yet earned visibility.

Think of your sitemap as a directional signpost—not a demand, but a helpful suggestion. The better it’s structured, the easier it becomes for search engines to crawl and index the pages that matter most.

Keeping the Root Directory Organized

Think of your website’s root directory like the front desk of a busy office. It’s where every request is processed, routed, and handled—and when it’s cluttered, everything slows down.

Your sitemap deserves a place right at the front. Storing your XML sitemap file in the root directory of your domain (e.g., example.com/sitemap.xml) makes it easy for search engine crawlers to locate. Location also sets scope: under the sitemaps.org protocol, a sitemap at example.com/blog/sitemap.xml can only list URLs under /blog/, while one at the root can cover the whole site.

To make the process even smoother, add a Sitemap: line with the full sitemap URL to your robots.txt file. Crawlers that support the directive, including Google and Bing, can find your sitemap this way even if you never submit it manually.
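For reference, the directive is a single Sitemap: line with an absolute URL, and it can sit anywhere in robots.txt because it’s independent of the User-agent groups. The sketch below uses a hypothetical robots.txt and Python’s `urllib.robotparser` (`site_maps()` requires Python 3.8 or later) to confirm the line is picked up the way a parser would read it.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt; the Sitemap line takes a full, absolute URL.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
print(parser.site_maps())
```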

At the same time, keep the root directory lean. Stacking it with unnecessary files, such as old scripts, unused assets, or duplicate sitemap versions, makes your website harder to manage, and a stale duplicate sitemap risks pointing crawlers at an outdated URL list.

A well-organized root structure not only supports crawl and index efforts but also contributes to a more efficient and secure website overall.

Treat the root like prime real estate. Only place what matters, and make sure your sitemap has a clear and accessible home.

Updating Sitemaps Regularly to Reflect Site Changes

A sitemap isn’t a one-time setup—it’s a living part of your website. If your site changes often but your sitemap doesn’t, you’re basically handing search engines an outdated map and expecting them to find their way.

Sites that publish fresh content—like blog posts, new product listings, or updated landing pages—need a sitemap that keeps up.

Whether you generate it dynamically or update it manually, every change should be reflected. If a new page is added, it belongs in the sitemap. If a page is removed or redirected, it shouldn’t stay listed. Keeping your sitemap in sync helps search engines crawl and index your site more accurately.

Neglecting this can create problems. An outdated sitemap full of broken links or missing updates leads to wasted crawl budget and poor indexing decisions. Search engines may spend time on pages that no longer exist while missing new ones entirely.

Think of your sitemap like a digital inventory of your site’s content. When it’s up to date, search engine crawlers can navigate your site more efficiently, improving the chances that high-priority pages appear in search results. Regular maintenance—just like updating your internal links or checking for broken pages—should be part of your SEO routine.

It’s not about perfection, but consistency. An accurate, current sitemap keeps your website’s content visible, organized, and easier for both crawlers and visitors to find.

Avoiding Common Sitemap Mistakes (Broken Links, Outdated URLs, Excessive Size)

Even with the best intentions, sitemaps can quickly go from helpful to harmful if they’re not handled with care. A few overlooked details can make it harder—not easier—for search engines to crawl and index your content.

One of the most common mistakes is including broken links or URLs marked as “noindex.” These don’t belong in your sitemap. When search engine crawlers hit dead ends or are told not to index what’s listed, it wastes crawl budget and sends mixed signals about your site’s structure.
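If your crawler or CMS can export each URL’s status code and robots directives, filtering out these entries is straightforward. This sketch assumes a hypothetical `CrawledPage` record; it keeps only URLs that respond 200 directly (so redirects and 404s drop out) and carry no noindex.

```python
from dataclasses import dataclass

@dataclass
class CrawledPage:
    """Hypothetical crawl record; field names are illustrative only."""
    url: str
    status: int    # HTTP status returned without following redirects
    noindex: bool  # True if a meta robots or X-Robots-Tag noindex was found

def sitemap_eligible(pages):
    """Keep only URLs that answer 200 directly and may be indexed."""
    return [p.url for p in pages if p.status == 200 and not p.noindex]

pages = [
    CrawledPage("https://www.example.com/services/", 200, False),
    CrawledPage("https://www.example.com/old-offer/", 404, False),
    CrawledPage("https://www.example.com/summer-sale/", 301, False),
    CrawledPage("https://www.example.com/thank-you/", 200, True),
]
print(sitemap_eligible(pages))
```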

Another issue is sitemap size. A single XML sitemap is capped at 50,000 URLs or 50MB (52,428,800 bytes) uncompressed. Go past either limit without splitting into smaller files tied together by a sitemap index, and search engines may reject the file, leaving valid URLs unread.
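Splitting a large URL list is mostly bookkeeping. This sketch chunks URLs into files of at most 50,000 entries and rejects any serialized file over 52,428,800 bytes; the function name `split_into_sitemaps` and the product URLs are hypothetical, and the resulting files would then be listed in a sitemap index.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50MB uncompressed = 52,428,800 bytes

def split_into_sitemaps(urls, max_urls=MAX_URLS):
    """Serialize URLs into sitemap files that respect both protocol limits."""
    files = []
    for start in range(0, len(urls), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for loc in urls[start:start + max_urls]:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = loc
        data = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
        if len(data) > MAX_BYTES:
            raise ValueError("sitemap exceeds 50MB; use a smaller max_urls")
        files.append(data)
    return files

urls = [f"https://www.example.com/product/{i}" for i in range(120_000)]
files = split_into_sitemaps(urls)
print(len(files))  # 3 files: 50,000 + 50,000 + 20,000 URLs
```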

Outdated URLs are another silent killer. Leaving old, removed, or redirected pages in your sitemap only creates friction. Search robots end up crawling pages that no longer exist, missing the new ones you actually want indexed. That can slow down how quickly your updated content gets noticed and reduce your website’s overall crawl efficiency.

If you want your sitemap to work in your favor, treat it like a curated list—not just an automated dump of every URL you’ve ever created. The cleaner it is, the easier it is for search engines to understand your site’s structure and prioritize the pages that still matter.

Submitting Sitemaps to Google Search Console and Bing Webmaster Tools

Publishing your sitemap is only half the job. If search engines don’t know where to find it, all that effort could go unnoticed.

That’s why submitting your sitemap through tools like Google Search Console and Bing Webmaster Tools is a critical final step—and yes, it’s completely free.

Here’s how to do it on each platform, step by step:

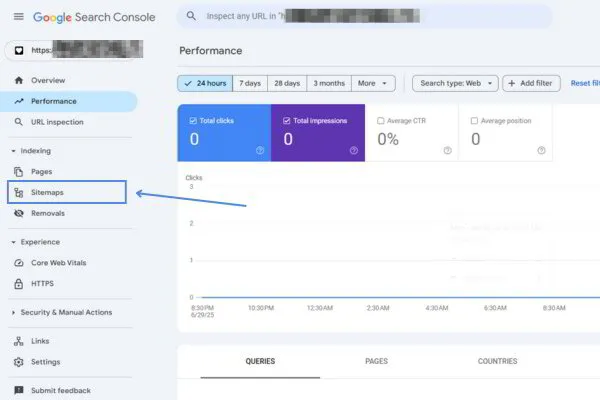

Submitting Your Sitemap to Google Search Console

- Log in to your Google Search Console account.

- Select the property (your website) you want to manage.

- In the left-hand menu, click “Indexing” → “Sitemaps.”

- In the “Add a new sitemap” field, enter the end of your sitemap URL (for example, sitemap.xml).

- Click “Submit.”

After submission, the page will update to show your sitemap status, including when it was last read, how many URLs were discovered, and whether any errors exist.

Submitting Your Sitemap to Bing Webmaster Tools

- Log in to your Bing Webmaster Tools account.

- Choose the website property you want to work with.

- Click on “Sitemaps” in the menu.

- Hit the “Submit Sitemap” button.

- Enter your full sitemap URL (e.g., https://yourdomain.com/sitemap.xml) and confirm.

Bing will display submission status, crawl results, and any issues detected during indexing.

After Submission

Submitting your sitemap isn’t something you need to repeat after every edit. Search engines periodically re-read sitemaps they already know about, so keeping the file current matters most. After significant changes, such as launching a new section or migrating the site, resubmitting in these tools is a quick way to prompt a fresh read.

Monitoring your sitemap through these tools also helps catch common problems—like blocked URLs, crawl errors, or formatting issues—that could impact your website’s visibility. Keeping an eye on these reports ensures your site remains accessible, indexable, and aligned with search engine optimization best practices.

It’s a simple process, but one that makes a measurable difference in how quickly and efficiently search engines crawl your site.

Differences Between XML and HTML Sitemaps

Search engine optimization isn’t just about keywords and backlinks—it’s also about clarity. And few tools bring more structure to your website than a sitemap. But not all sitemaps serve the same purpose.

That’s where XML and HTML sitemaps come into play. While they sound similar, each one supports a different audience and function. One speaks to bots, the other to people, and when used together, they give your website a well-rounded edge.

Here’s a quick comparison to help you understand how each one works and why having both can amplify your SEO and user experience:

| Feature | XML Sitemap | HTML Sitemap |
| --- | --- | --- |
| Format | Written in XML format; designed for machine readability | Written in HTML; designed for human visitors |
| Primary Audience | Search engine crawlers (e.g., Googlebot, Bingbot) | Human users looking to navigate your site |
| Content Includes | Your important, indexable URLs, with optional metadata like lastmod, changefreq, and priority | A list of clickable internal links that act as a directory or index |
| Update Process | Often auto-generated by SEO plugins or CMS systems | Usually manually created or generated through CMS functionality |
| SEO Benefit | Helps search engines discover, crawl, and index important pages faster and more reliably | Improves crawlability through internal links and supports user navigation |
| User Benefit | None directly (not meant for visitors) | Offers visitors an easy overview of your site’s structure |
| Placement | Usually stored in the root directory and referenced in robots.txt | Published as a public-facing page on the site |
| Use Case | Essential for large or complex sites, especially with rich media or deep architecture | Useful for accessibility and helping users find orphaned or buried pages |

Using both doesn’t create overlap—it creates balance. The XML sitemap ensures that search engines crawl and index your pages efficiently, while the HTML sitemap makes it easier for visitors to explore your content without friction.

If your goal is to improve both technical SEO and user experience, having both sitemaps in place is a smart, low-maintenance win.

How Do HTML Sitemaps Aid User Navigation and Internal Linking?

While XML sitemaps help search engines find your content, HTML sitemaps exist for the people actually visiting your site. On larger websites with deep menus and scattered content, finding the right page can take too many clicks.

A single, well-organized HTML sitemap cuts through that problem.

Instead of relying on drop-downs or endless scrolling, users get a bird’s-eye view of what your site offers—all on one page. For someone looking for an old article, a specific service, or a less-visible section, it offers a direct route that doesn’t depend on perfect site navigation.
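Building one can be as simple as a heading and a link list per section. This Python sketch assumes a hypothetical dictionary of sections, page titles, and paths (reusing the service examples from earlier) and escapes text with the standard library’s `html.escape`; styling and placement are up to your theme.

```python
from html import escape

def html_sitemap(sections):
    """Render an HTML sitemap: one heading plus a link list per section."""
    parts = ['<nav aria-label="Sitemap">']
    for section, links in sections.items():
        parts.append(f"<h2>{escape(section)}</h2>")
        parts.append("<ul>")
        for title, url in links:
            parts.append(f'<li><a href="{escape(url)}">{escape(title)}</a></li>')
        parts.append("</ul>")
    parts.append("</nav>")
    return "\n".join(parts)

# Hypothetical sections and paths.
page = html_sitemap({
    "Services": [
        ("SEO Services", "/services/seo/"),
        ("PPC Management", "/services/ppc/"),
        ("Local Marketing", "/services/local/"),
    ],
    "Blog": [("Is Sitemap Important for SEO?", "/blog/sitemaps-seo/")],
})
print(page)
```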

Beyond convenience, this type of sitemap quietly strengthens your internal linking.

Each link listed reinforces the connection between pages, which helps guide search engine crawlers more effectively across your site. That extra structure improves how your pages are discovered, especially those that aren’t linked elsewhere or are buried several levels deep.

It’s not just about visibility either. Internal links share authority between pages. A sitemap acts like a traffic hub, helping distribute that value across your site so it’s not concentrated in just a few places. For sites with lots of content, that balance can influence how well pages perform over time.

An HTML sitemap doesn’t need to be complex to be effective. Its real strength is in offering clarity—both for your users and the bots that power search.

Conclusion

A sitemap won’t instantly shoot your site to the top of search results, but it will make sure your content is seen, crawled, and understood by the bots that matter. It’s not magic. It’s just smart structure.

Whether you’re running a massive ecommerce store, launching a new site, or regularly publishing fresh content, a well-maintained sitemap can quietly power up your SEO efforts. From highlighting important pages to uncovering orphaned ones, it helps search engines and users alike move through your website with fewer roadblocks.

Is it required? Not always. Is it worth doing right? Absolutely.

And if you’d rather not wrestle with sitemap tags, crawl budgets, and indexation issues on your own, Boba Digital has you covered. Our team knows how to turn technical tasks into real-world rankings.

Want your site crawled smarter, not harder? Talk to us—your sitemap (and your traffic) will thank you.

FAQs

Does having a sitemap guarantee my pages will be indexed by Google?

Having an XML sitemap improves your chances of getting web pages properly indexed, but it doesn’t guarantee it. Search engines like Google still evaluate your content based on quality, internal links, and overall website structure. A sitemap acts as a guide, helping search engine crawlers discover pages—especially new pages, orphan pages, or those buried deep in your site.

How often should I update my sitemap?

Your sitemap should stay in step with your website’s content. If you’re regularly adding blog posts, updating product pages, or changing site structure, your XML sitemap needs to keep up. Using a dynamic sitemap or regularly updating your sitemap file helps search engines crawl and index fresh content more efficiently. Outdated sitemaps filled with broken links or removed URLs can waste crawl budget and hurt your website’s visibility in search engine results pages.

Can a sitemap help if my website is new or has few backlinks?

Absolutely. For new websites or sites with limited external links, a sitemap provides essential visibility. Since search engines discover pages largely by following links, an XML sitemap listing all your important pages improves content discovery when internal or external links are scarce. Submitting the sitemap through Google Search Console or Bing Webmaster Tools helps search robots find your content even if your domain hasn’t built up authority yet.

Can a sitemap improve my website’s rankings directly?

A sitemap won’t directly boost your position in search engine results pages, but it plays a critical supporting role. It ensures that search engines crawl and index your pages efficiently, especially important pages that impact your SEO value. Better crawlability leads to more consistent visibility for your content. While the sitemap itself isn’t a ranking factor, it helps keep your SEO efforts organized and your content properly indexed—both essential for long-term performance.

Should I include every page of my website in the sitemap?

No, not every page needs to be listed in your sitemap. Pages marked “noindex,” duplicates, or low-value pages like login screens or admin pages should be excluded. Your XML sitemap should highlight the most important pages that you want search engines to crawl and index, like blog posts, service pages, or product listings. This helps web crawlers use your crawl budget efficiently while focusing on the content that supports your search engine optimization goals.

How can I check if my sitemap is working properly?

The easiest way to check your sitemap is by submitting it to Google Search Console or Bing Webmaster Tools. These tools report whether the sitemap file could be read, how many URLs were discovered, and whether there are errors such as unreachable or invalid URLs; in Google Search Console, you can also open the page indexing report for a specific sitemap to see how many of its URLs are indexed. You can also open your sitemap in a browser to confirm that it lists canonical URLs, follows the XML format, and includes up-to-date information like lastmod and changefreq tags.
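For a quick local check before or after submitting, you can parse a downloaded copy of the file. This sketch assumes the sitemap is already saved as bytes; the helper `check_sitemap` is hypothetical. It confirms the root element is a `<urlset>` or `<sitemapindex>` in the sitemaps.org namespace, counts `<loc>` entries, and does a loose format check on `<lastmod>`. It doesn’t fetch any URLs, so status codes still need Search Console, Bing Webmaster Tools, or a crawler.

```python
import re
import xml.etree.ElementTree as ET

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
W3C_DATE = re.compile(r"^\d{4}-\d{2}-\d{2}")  # loose check: starts with YYYY-MM-DD

def check_sitemap(xml_bytes):
    """Return (kind, loc_count, problems) for a sitemap or sitemap index."""
    root = ET.fromstring(xml_bytes)  # raises ET.ParseError if not well-formed
    kinds = {f"{{{NS}}}urlset": "urlset", f"{{{NS}}}sitemapindex": "sitemapindex"}
    if root.tag not in kinds:
        return None, 0, [f"unexpected root element {root.tag}"]
    problems = []
    locs = root.findall(f".//{{{NS}}}loc")
    for lastmod in root.iter(f"{{{NS}}}lastmod"):
        if not W3C_DATE.match(lastmod.text or ""):
            problems.append(f"bad lastmod: {lastmod.text!r}")
    return kinds[root.tag], len(locs), problems

sample = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc><lastmod>2024-05-03</lastmod></url>
  <url><loc>https://www.example.com/blog/</loc><lastmod>May 3rd</lastmod></url>
</urlset>"""
print(check_sitemap(sample))
```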